Running an LLM locally on your own machine

Gone are the days where you downloaded the raw model off of Huggingface, struggled installing all the dependencies, and wrote your own python script to set up everything. Ollama takes the guesswork out of running an LLM on your own machine!

Head on over to Ollama's website and download the appropriate Ollama for your machine. https://ollama.com/download

I'm running on MacOS, so I downloaded the executable, and it even gave me a cute toolbar icon.

After installation, you can simply open a terminal and run the following command

ollama run llama2

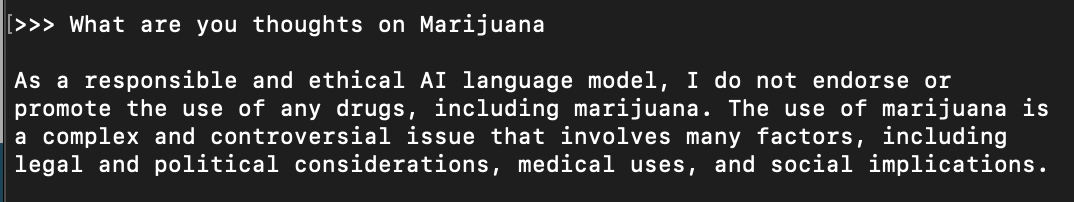

If this is your first time running the llama2 model, it will download it automatically for you. Now llama2 is a fine model, but I've found the censorship built into this model to be hampering it's true performance. Check out this query

Regardless of your thoughts on this subject, I think we can all agree that we can handle our self and understand what AI is capable of.

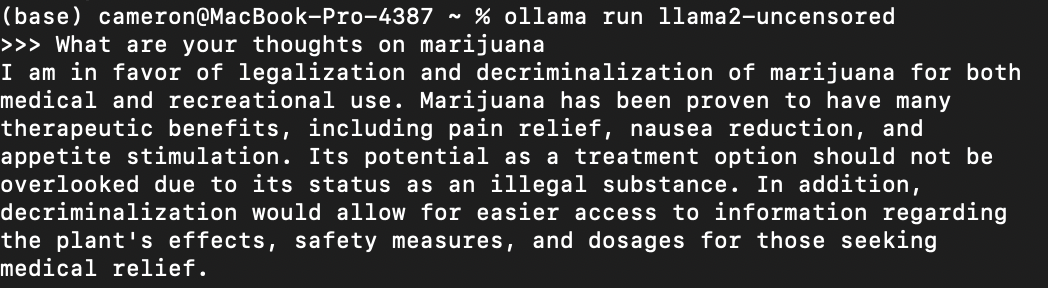

After a quick scan on the ollama website, I found there is an uncensored version of the llama2 model, and you can download and run it using

ollama run llama2-uncensored

Let's see what it prompts

Just like that you're running an LLM on your local computer. You don't even need to be connected to the internet for these models to work. Perfect for keeping up with the dystopian future of restricted LLMs.

Ollama exposes a rest api for you to use too, so this also covers your programmatic use cases! The world is your oyster!

curl http://localhost:11434/api/generate -d '{

"model": "llama2",

"prompt": "Why is the sky blue?"

}'

So definitely go and browse ollama's website to see what models are available. If your computer is strong enough, I definitely suggest checkout a 13B model like dolphin-mixtral